Adding vmnet support to QEMU


Every hobby operating system developer dreams of the day that a stack wrought from their own blood, sweat, and keystrokes renders its first webpage.

Back in early 2021, I decided to break ground on the first step towards this goal.

Before we can connect to the web, we need to handle all the necessary protocols to exchange packets over both the web and our humble local network link. This includes implementing protocols such as TCP, DNS, and ARP.

But before we even think about talking in protocols, we need the bare bones: some way to send packets out, and get packets in.

This job of transmitting and receiving packets (TX and RX, respectively) belongs to a dedicated piece of hardware in the machine. It might be an Ethernet controller, or a WiFi radio; whether wired or wireless, this hardware is referred to as a network interface controller (or NIC). We’re not going for anything fancy here, so I started axle off with a driver for a relatively primitive NIC: the venerable RTL8139.

The RTL8139 has something of a reputation in the OSDev space for being one of the more straightforward NICs for the intrepid driver author. Datasheet and programmer’s guide in hand, our eyes sparkling and our tails bushy, it’s time to dive in.

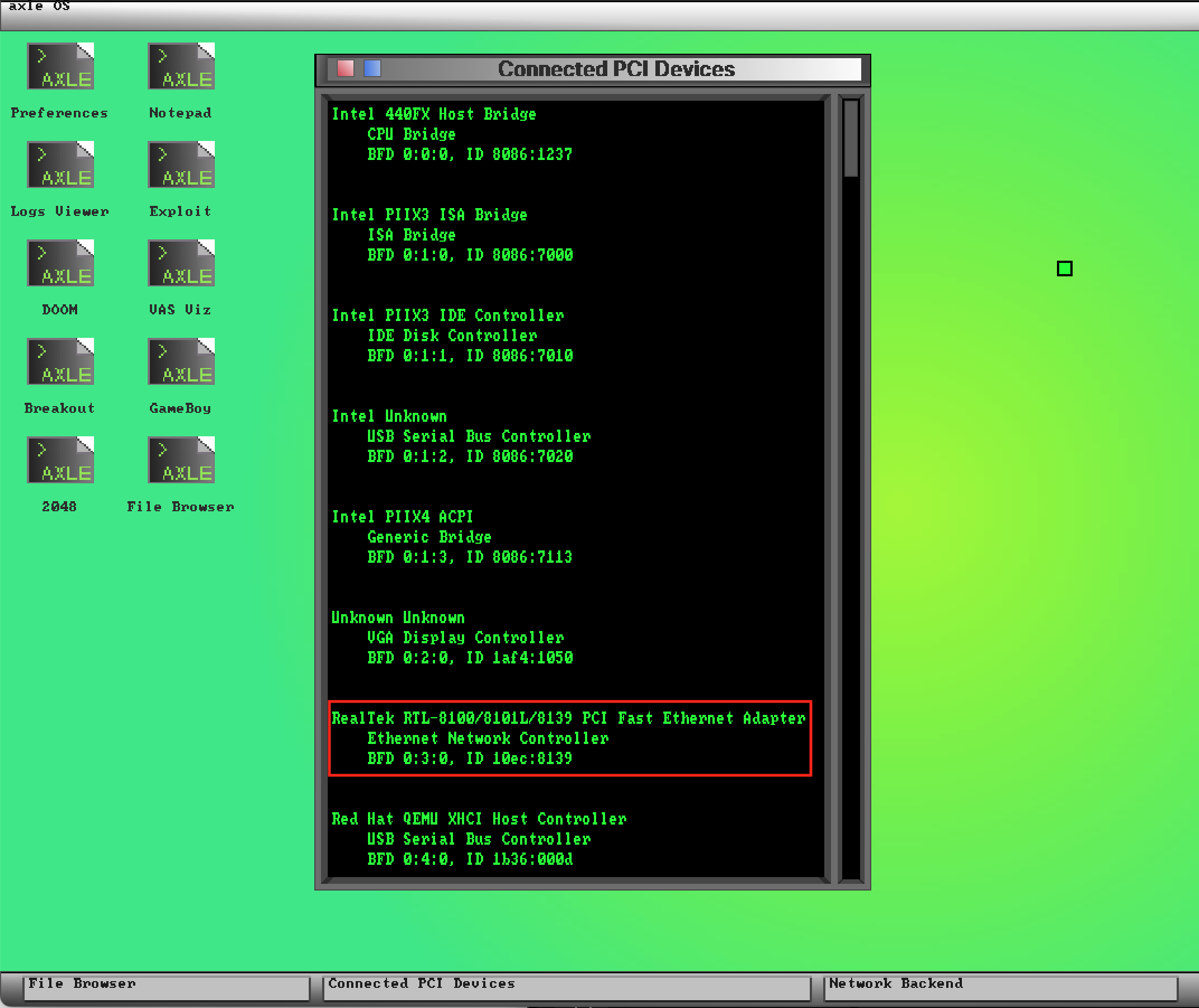

Since the NIC will be connected over the PCI bus, this is a good time to hammer out a userspace PCI driver. The PCI subsystem should also allow other drivers to talk to it, so it can provide info on what devices are connected, and allow the other drivers to read and write to a PCI device’s configuration space.
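For reference, here's a minimal sketch of how a PCI driver can reach a device's configuration space through the legacy port I/O interface (configuration mechanism #1). You write a bus/device/function/offset address to port 0xCF8, then read the selected dword from port 0xCFC. This is Python rather than axle's actual driver code. `port_io` is a hypothetical object exposing `outl()`/`inl()`, and I'm assuming mechanism #1 rather than memory-mapped configuration access.

```python
CONFIG_ADDRESS = 0xCF8  # port that selects which config register to access
CONFIG_DATA = 0xCFC     # port through which the selected dword is read/written

REALTEK_VENDOR_ID = 0x10EC
RTL8139_DEVICE_ID = 0x8139


def pci_config_address(bus: int, device: int, function: int, offset: int) -> int:
    """Build the 32-bit CONFIG_ADDRESS value for configuration mechanism #1."""
    if not (0 <= bus < 256 and 0 <= device < 32 and 0 <= function < 8 and 0 <= offset < 256):
        raise ValueError("bus/device/function/offset out of range")
    return (
        (1 << 31)            # enable bit
        | (bus << 16)
        | (device << 11)
        | (function << 8)
        | (offset & 0xFC)    # registers are accessed as aligned dwords
    )


def pci_config_read32(port_io, bus: int, device: int, function: int, offset: int) -> int:
    """Read one config-space dword. `port_io` is a hypothetical port I/O wrapper."""
    port_io.outl(CONFIG_ADDRESS, pci_config_address(bus, device, function, offset))
    return port_io.inl(CONFIG_DATA)


def is_rtl8139(id_dword: int) -> bool:
    """Offset 0x00 holds the vendor ID (low 16 bits) and device ID (high 16 bits)."""
    return (id_dword & 0xFFFF) == REALTEK_VENDOR_ID and (id_dword >> 16) == RTL8139_DEVICE_ID
```

Enumeration is then a loop over every bus/device/function, skipping slots whose vendor ID reads back as 0xFFFF.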

Hey there RTL8139! Now that we can see and chat to you, we’ll configure your knobs and prime your buffers.
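Configuring the knobs and priming the buffers comes down to a handful of register writes. The sketch below follows the sequence commonly used in hobby-OS RTL8139 drivers. It powers the chip on via CONFIG_1, performs a software reset, and points RBSTART at the receive ring. Then it unmasks the Receive OK and Transmit OK interrupts in IMR, chooses which packets to accept in RCR, and finally enables the receiver and transmitter. `RecordingIO` is a hypothetical stand-in that logs writes instead of touching hardware. Register offsets are relative to the NIC's I/O base. The receive ring is assumed to be 8 KB + 16 bytes, plus roughly 1500 bytes of slack because WRAP is set. This is not axle's actual driver.

```python
# Register offsets from the NIC's I/O base (BAR0).
RTL_RBSTART = 0x30   # receive buffer start (physical address)
RTL_CMD = 0x37       # command register
RTL_IMR = 0x3C       # interrupt mask register (16-bit)
RTL_RCR = 0x44       # receive configuration register
RTL_CONFIG_1 = 0x52

CMD_RESET = 0x10
CMD_RX_ENABLE = 0x08
CMD_TX_ENABLE = 0x04
IMR_ROK = 0x0001     # Receive OK
IMR_TOK = 0x0004     # Transmit OK
RCR_AAP = 0x01       # accept all packets (promiscuous)
RCR_APM = 0x02       # accept packets addressed to our MAC
RCR_AM = 0x04        # accept multicast
RCR_AB = 0x08        # accept broadcast
RCR_WRAP = 0x80      # write packets past the ring's end instead of wrapping


class RecordingIO:
    """Hypothetical stand-in for port I/O: logs writes, and reads return 0."""

    def __init__(self):
        self.writes = []

    def outb(self, reg, value):
        self.writes.append(("b", reg, value))

    def outw(self, reg, value):
        self.writes.append(("w", reg, value))

    def outl(self, reg, value):
        self.writes.append(("l", reg, value))

    def inb(self, reg):
        return 0  # real hardware clears CMD_RESET once the reset completes


def rtl8139_init(io, rx_buffer_phys: int) -> None:
    io.outb(RTL_CONFIG_1, 0x00)                 # power on the chip
    io.outb(RTL_CMD, CMD_RESET)                 # software reset...
    while io.inb(RTL_CMD) & CMD_RESET:          # ...which self-clears when done
        pass
    io.outl(RTL_RBSTART, rx_buffer_phys)        # where received packets land
    io.outw(RTL_IMR, IMR_ROK | IMR_TOK)         # unmask RX-OK / TX-OK interrupts
    io.outl(RTL_RCR, RCR_AAP | RCR_APM | RCR_AM | RCR_AB | RCR_WRAP)
    io.outb(RTL_CMD, CMD_RX_ENABLE | CMD_TX_ENABLE)
```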

With that out of the way, let’s transmit a packet!
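Transmission on the RTL8139 cycles through four transmit descriptors in round-robin order. Each descriptor has a start-address register (TSAD0 to TSAD3 at 0x20 to 0x2C) and a status register (TSD0 to TSD3 at 0x10 to 0x1C). Writing the frame length to TSD with the OWN bit (bit 13) clear hands the buffer to the NIC. Below is a minimal sketch. It assumes the caller has already copied the frame into a DMA-able buffer at `buffer_phys_addr` and zero-padded it to the Ethernet minimum. `TxRing` is a hypothetical helper that records the writes it would perform.

```python
RTL_TSD0 = 0x10         # Transmit Status of Descriptor 0; TSD1-3 follow every 4 bytes
RTL_TSAD0 = 0x20        # Transmit Start Address of Descriptor 0; TSAD1-3 likewise
TSD_SIZE_MASK = 0x1FFF  # bits 0-12: frame length; bit 13 (OWN) is left clear
NUM_TX_DESCRIPTORS = 4
ETH_MIN_FRAME = 60      # minimum Ethernet frame length, excluding the 4-byte FCS


class TxRing:
    """Round-robin over the RTL8139's four TX descriptors (hypothetical helper).

    Records (register offset, value) pairs instead of performing real 32-bit
    port writes, so it runs anywhere."""

    def __init__(self):
        self.next_descriptor = 0
        self.writes = []

    def transmit(self, buffer_phys_addr: int, frame_len: int) -> int:
        i = self.next_descriptor
        # Short frames must be zero-padded by the caller; report the padded size.
        length = max(frame_len, ETH_MIN_FRAME)
        self.writes.append((RTL_TSAD0 + 4 * i, buffer_phys_addr))
        # Writing the size with OWN clear hands the buffer to the NIC.
        self.writes.append((RTL_TSD0 + 4 * i, length & TSD_SIZE_MASK))
        self.next_descriptor = (i + 1) % NUM_TX_DESCRIPTORS
        return i
```

A real driver would also check each descriptor's TOK bit (or wait for the Transmit OK interrupt) before reusing it.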

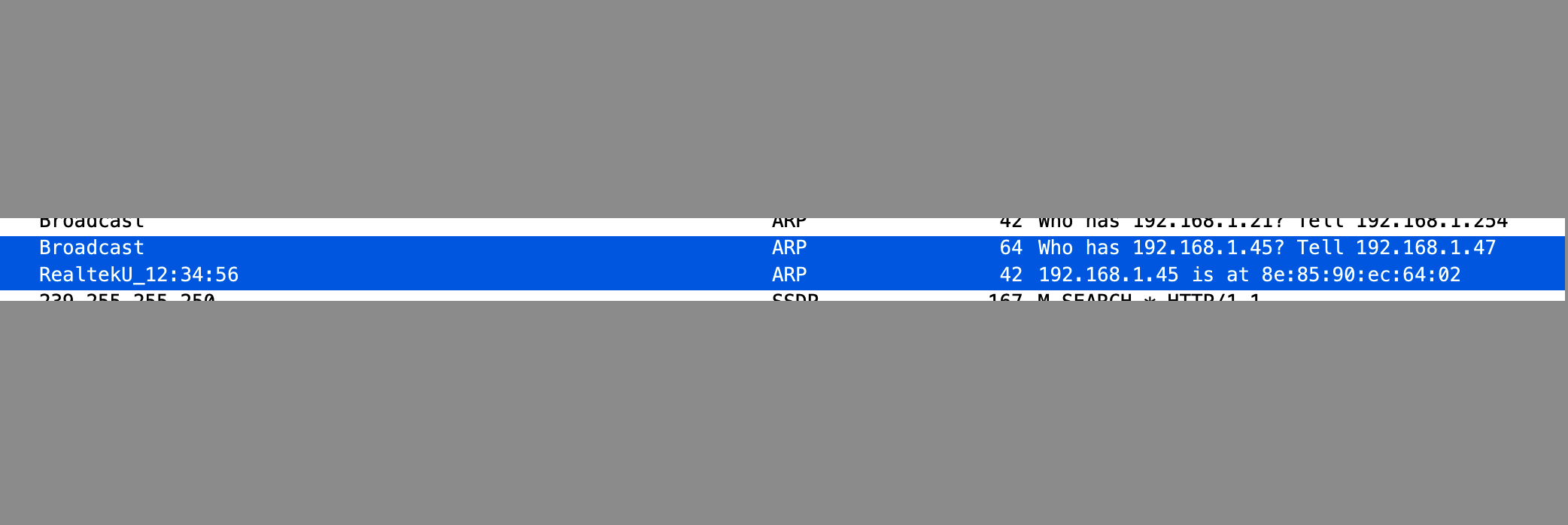

Whoa! We sent an ARP request to my real-life WiFi router, and my router responded! Now we’re cooking.
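An ARP request is small enough to build by hand. It is a 14-byte Ethernet header addressed to the broadcast MAC with EtherType 0x0806, followed by a 28-byte ARP payload asking who owns the target IPv4 address. Here's a sketch using `struct`. The function names are hypothetical. The addresses in the check below are placeholders; 52:54:00:12:34:56 happens to be QEMU's default guest MAC.

```python
import struct

ETHERTYPE_ARP = 0x0806
BROADCAST_MAC = b"\xff" * 6


def mac_bytes(mac: str) -> bytes:
    return bytes(int(octet, 16) for octet in mac.split(":"))


def ipv4_bytes(ip: str) -> bytes:
    return bytes(int(octet) for octet in ip.split("."))


def build_arp_request(src_mac: str, src_ip: str, target_ip: str) -> bytes:
    """Ethernet frame asking 'who has target_ip? tell src_ip'."""
    ethernet_header = BROADCAST_MAC + mac_bytes(src_mac) + struct.pack("!H", ETHERTYPE_ARP)
    arp_payload = struct.pack(
        "!HHBBH6s4s6s4s",
        1,                      # hardware type: Ethernet
        0x0800,                 # protocol type: IPv4
        6,                      # hardware address length
        4,                      # protocol address length
        1,                      # operation: request (2 would be a reply)
        mac_bytes(src_mac),     # sender hardware address
        ipv4_bytes(src_ip),     # sender protocol address
        bytes(6),               # target hardware address: unknown, so zeroed
        ipv4_bytes(target_ip),  # target protocol address
    )
    return ethernet_header + arp_payload
```

The result is 42 bytes, so it still needs padding to the 60-byte Ethernet minimum before it goes out on the wire.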

Hmm, I can see the router’s response in Wireshark, but my driver is never running its packet-received handler. No worries, I probably just misconfigured something. Time to hunt some bugs!
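For context, here's roughly what the packet-received handler has to do once the Receive OK interrupt fires. Each packet in the RX ring starts with a 4-byte header: a 16-bit status word, then a 16-bit length that includes the trailing 4-byte CRC. After consuming the packet, the driver advances its read offset to the next dword boundary and writes that offset minus 16 to CAPR. The sketch below covers only the parsing step. It assumes an 8 KB ring allocated with spare room past its end, so that with RCR's WRAP bit set a packet never has to be stitched back together. `read_packet` is a hypothetical name, and acknowledging the interrupt (writing the ISR bits back to clear them) is left out.

```python
import struct

RX_BUF_LEN = 8192          # 8 KB ring
RX_STATUS_ROK = 0x0001     # bit 0 of the per-packet status word: received OK
CRC_LEN = 4


def read_packet(ring: bytes, offset: int):
    """Parse the packet whose 4-byte header starts at `offset` in the RX ring.

    Returns (frame, new_offset, capr), where `frame` excludes the CRC,
    `new_offset` is where the next header begins, and `capr` is the value
    to write to the CAPR register to release the consumed space."""
    status, length = struct.unpack_from("<HH", ring, offset)
    if not status & RX_STATUS_ROK:
        raise ValueError(f"bad packet status {status:#06x}")
    data_start = offset + 4
    frame = ring[data_start:data_start + length - CRC_LEN]
    # Skip header + data (length already counts the CRC), rounded up to a dword.
    new_offset = (offset + length + 4 + 3) & ~3
    new_offset %= RX_BUF_LEN
    # Drivers conventionally write CAPR 16 bytes behind the real read offset.
    capr = (new_offset - 16) & 0xFFFF
    return frame, new_offset, capr
```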

That’s strange. Everything seems to be configured correctly, and no matter what I try to force a packet delivery, it just won’t kick. Is anyone else feeling itchy?

Let’s just try tweaking the way we’re configuring the hardware some more. I’m sure this’ll all blow over soon.

Two weeks later

I’ve aged. Youth has fled and I am a husk, adrift on the capricious wind. I can’t get these fucking packets to show up for the life of me.

Desperation sets in

OK, I’ve checked this driver top-to-bottom. No matter what I try, the NIC’s packet-received interrupt never fires. I cannot see what else could be wrong with my driver.

Maybe there’s some kind of bug with my PCI code?

I have checked other open-source drivers. I have memorized the RTL8139 manual, though its letters shift and reform themselves before my eyes. I have considered human sacrifice. I have progressed through every stage of grief.

Hmm, better check my interrupt routing.

Drought and famine ravage the diseased plains of my mind. All that remains of my sanity is the empty claim that I still command it. I insist no, I don’t need a break thank you, and how’s the weather anyway?

Normal coping mechanisms exhausted, it’s time to consider the unthinkable: could this be a QEMU bug?

Delving into QEMU

I cloned the repo, set up the build system, and got to work poking around in the QEMU networking internals. QEMU has a few different ways of setting up networking with the guest operating system.

- ‘User’ networking
  - This mode obscures the low-level packets from the guest OS, and essentially just plumbs in a full-blown TCP/IP interface to the guest. There’s a full network stack running within QEMU, which is inaccessible to the guest OS. This precludes the guest OS from doing its own stuff with the network, such as sending ICMP packets.
- ‘Tap’ networking
  - This mode provides a ‘raw’ network interface to the guest OS, by installing and exposing a ‘tap’ on the host OS’s network stack.

Tap networking is ideal for axle’s use case, as I want to get all up in there with the dirty dance of the network. While attaching a tap to the NIC is supported out-of-the-box on Linux, we’ll need to install the TunTap kernel extension to achieve this on macOS.

When QEMU is configured to use tap networking, it reads from the tap via a special device file, /dev/tap0. Write to the file, and the tap will inject packets into the macOS network stack. Read from the file, and the tap will provide you with whatever packets have been sent to the virtual interface.

Digging around in QEMU’s event loop, I found that it uses glib to watch device files and dispatch a handler whenever one has something ready to read. This way, QEMU can asynchronously listen for work from device files representing keyboard input, mouse input, network input, etc.
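The idea behind that kind of loop can be shown in miniature with Python's `select.poll`. You register each file descriptor with a callback, block in `poll()`, and dispatch whichever descriptors come back readable. This is a toy, not glib's or QEMU's actual implementation, and `EventLoop`, `add_reader`, and `run_once` are hypothetical names. Note that a descriptor reported with `POLLNVAL` never reaches its handler.

```python
import os
import select


class EventLoop:
    """Toy poll()-based loop: fds are registered with callbacks, and
    each iteration dispatches the callbacks of readable fds."""

    def __init__(self):
        self._poller = select.poll()
        self._handlers = {}

    def add_reader(self, fd: int, callback) -> None:
        self._handlers[fd] = callback
        self._poller.register(fd, select.POLLIN)

    def run_once(self, timeout_ms=None) -> list:
        """Wait for events once; return the fds that were reported invalid."""
        invalid = []
        for fd, revents in self._poller.poll(timeout_ms):
            if revents & select.POLLNVAL:
                # The kernel refuses to poll this fd: its handler never runs.
                invalid.append(fd)
            elif revents & (select.POLLIN | select.POLLHUP):
                self._handlers[fd](fd)
        return invalid


if __name__ == "__main__":
    loop = EventLoop()
    read_end, write_end = os.pipe()
    loop.add_reader(read_end, lambda fd: print("got", os.read(fd, 64)))
    os.write(write_end, b"hello")
    loop.run_once(timeout_ms=1000)  # prints: got b'hello'
```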

Hmm, could it be that no data is showing up when QEMU tries to read from the tap device file? Let’s test it ourselves with a quick script.

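```python
import os
from select import select

# Open the tap device file and print every packet that arrives on it.
f = open("/dev/tap0", "rb")

while True:
    # Block until the kernel reports the descriptor as readable.
    readable, _, _ = select([f.fileno()], [], [])
    packet = os.read(f.fileno(), 2048)
    print(packet)
```

Running this shows that /dev/tap0 definitely has data to read when a packet hits the tap. Why doesn’t QEMU’s event loop, then, register that it has received a packet?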

After much consternation, I sank to a new low: I joined IRC.

→ Joined channel #qemu

<codyd51> Hello! Apologies if this is the wrong place~ I've been digging into QEMU due to what may be a bug. I'm running QEMU on a macOS Big Sur host and configuring it with a tap device. However, QEMU never reads from the tap device even when it has data available to read.

<codyd51> I've confirmed this with several test programs that `open()` and `select()` on the tap device, so I'm sure that the tap device does indeed have data to read. I've also confirmed that QEMU provides its file descriptor for the tap device to glib's polling mechanism, and yet the file descriptor doesn't ever seem to be read from.

<stsquad> are you saying the main loop is stuck in a `poll()` that is never serviced? is this a macOS bug?

<codyd51> I don't know for sure that it's never serviced. As a quick hack I threw a `select()` on the tap's file descriptor into `async.c:aio_ctx_dispatch`, and it does indeed have data available to read

<stsquad> AIUI it should end up with the handlers set up by `tap_update_fd_handler`

<codyd51> My trace agrees: this is called on startup, with FD #13 being my tap device `tap_update_fd_handler(fd=13, readpoll=1, writepoll=0, enabled=1, usingvnet=0, hostvnet=10, backend=0)`

<stsquad> if you stick a break in `tap_read_packet` does it never get called?

<codyd51> `tap_read_packet()` is never called. `tap_read_poll()` is, though it appears that just switches whether the read-poll is enabled or not.

<codyd51> `aio_dispatch_handler(ctx, node)` is never called with `node->pfd.fd == tap_fd`

<codyd51> Oh, I'm mistaken: `aio_dispatch_handler(13)` is called once on startup, just after `qemu_net_queue_flush`, and once on Ctrl+C, just after `aio_dispatch_ready_handlers`

<codyd51> On startup's `qemu_net_queue_flush()` -> `aio_dispatch_handler("io-handler", tap_node)`, `tap_node->pfd.revents & G_IO_NVAL` is set... This seems to indicate that glib thinks the tap file descriptor is invalid for some reason?

We’ve got a lead, folks.

- QEMU’s machinery that kicks into action when the tap device has a packet available is never invoked.
- If I select() on the tap device file at various points within QEMU, it correctly reports that packet data is ready to read.
- When QEMU sets up its poll() on the tap device file, glib reports the file descriptor as invalid (G_IO_NVAL).

Tango, one two. The bug and I circle each other, wary-eyed, blades drawn. I strike; the bug parries and dives left, but stumbles.

<codyd51> Re: tap device not being polled and instead being reported as `G_IO_NVAL` upon opening: replacing `aio-posix.c:aio_set_fd_handler()`'s call to `g_source_add_poll()` with `g_source_add_unix_fd()` results in `G_IO_NVAL` no longer being sent.

<stefanha> QEMU uses an event loop so it won't try to read directly from the tap file descriptor.

<codyd51> I hacked up `os_host_main_loop_wait()` to `select()` on the tap, to ensure data really is showing up (it is): https://ghostbin.co/paste/2ebbg

<codyd51> The only FD I ever see notified by `aio_dispatch_handler` is #8, which appears to be some sort of pipe. None of the other FDs `[-1, 0, 3, 6, 10, 13]` are notified. What might be a good way to debug this?

<stefanha> Interesting. Maybe the problem is that the `poll(2)` event mask is missing the event that the macOS tap device is reporting.

<stefanha> That would explain why `select(2)` detected activity on the fd but you're seeing that `g_poll()` does not.

<codyd51> Ah ha, I'm so glad to hear I'm not going crazy!

<codyd51> `g_poll()` seems fine with the same bitmask QEMU passes, unless I'm missing something. A `read()` throws "I/O error" for a bit until the tap interface is up, then reads start succeeding when the device has data (and sometimes throws "Resource temporarily unavailable", I assume when I read everything available): https://ghostbin.co/paste/ve5p6

<stefanha> Did you look at the value of revents after `g_poll()` returns?

<codyd51> I didn't before, but just did: `G_IO_NVAL` (!)

<codyd51> I suppose this is why I was able to hit "Resource temporarily unavailable" even though my `read()` was protected by `g_poll()`: `g_poll()` wasn't working at all

<codyd51> It's not clear to me why `G_IO_NVAL` is being returned at all, though - the file descriptor is valid in the sense that it corresponds to an open file and can (occasionally) be read from

Gasp! Shock and awe! Fireworks light up the sky and we’re going to live a thousand lifetimes.

<stefanha> Perhaps the macOS tap driver returns `POLLNVAL` if the interface is not ready yet (e.g. `IFFDOWN`)

<codyd51> From a quick test, it returns `POLLNVAL` even after the interface comes up https://ghostbin.co/paste/c2pc3.

<codyd51> I confirmed this with another quick test: `select()` on a tuntaposx device works fine, `poll()` is borked https://ghostbin.co/paste/mrxef

Oh my god, I’m going to cry: whether from relief or delirium it has become difficult to say.

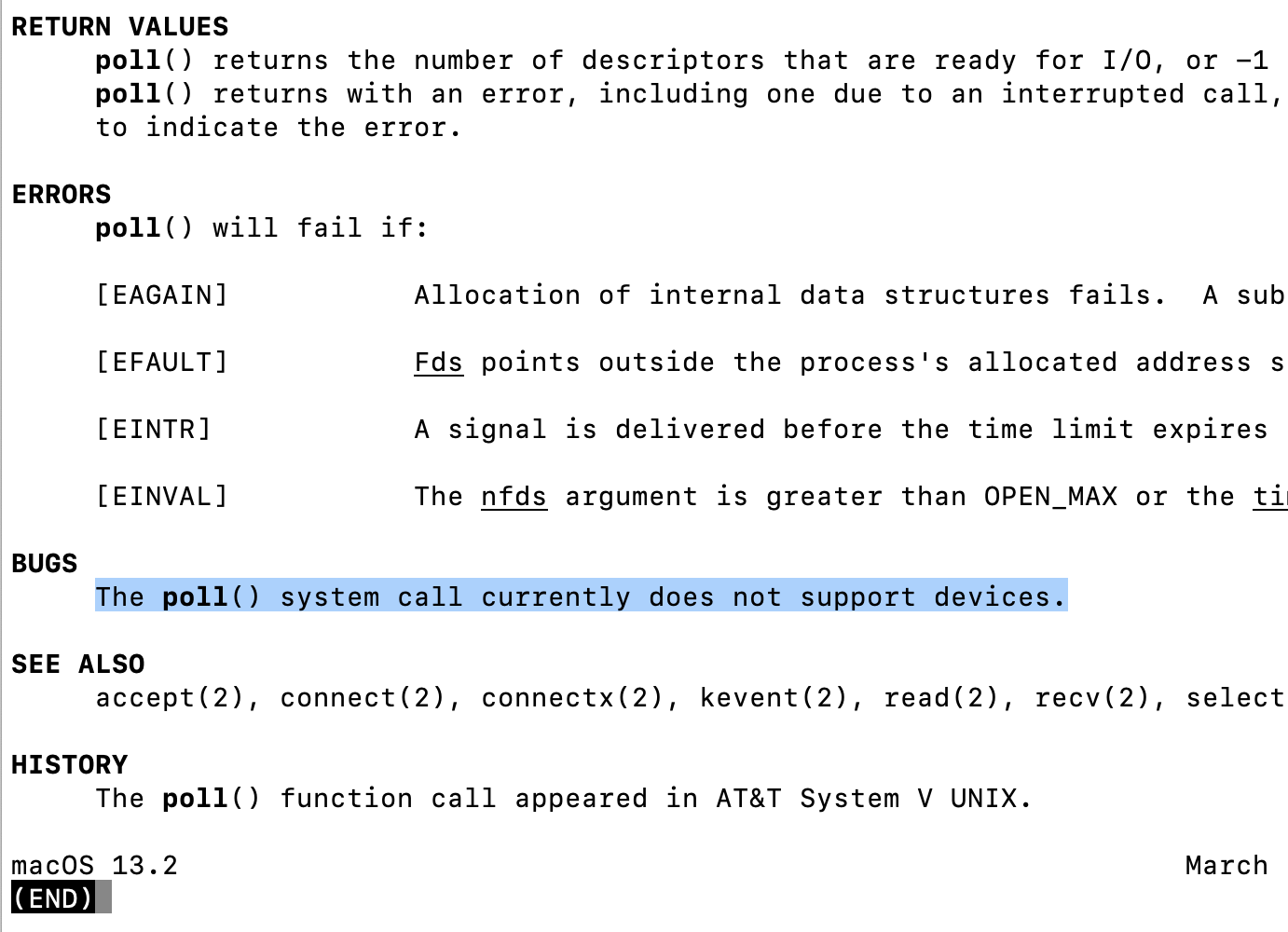

<pm215> codyd51: osx poll(2) manpage https://developer.apple.com/library/archive/documentation/System/Conceptual/ManPages_iPhoneOS/man2/poll.2.html says "BUGS The poll() system call currently does not support devices." which sounds like what you're seeing.

<codyd51> oh, wow

<stefanha> :(

<stefanha> That explains why the `select()` method is so simple and does not return `POLLNVAL`: https://sourceforge.net/p/tuntaposx/code/ci/master/tree/tuntap/src/tuntap.cc#l742

<stefanha> Maybe `poll(2)` isn't wired up to this in a useful way.

<codyd51> Well, I'm glad to finally understand what's going on here, as I've been banging my head against it for quite a while; the original thing that started me on this was writing an RTL8139 driver for my hobby OS a few weeks ago. Thank you sincerely for your help digging into this.

Behold, my shame, my glory.

To recap:

- In order to create a virtual network interface that can be wired up on one end to QEMU, and on the other to my Mac’s network stack, I used the tuntap kernel extension.
- This kernel extension creates a special device file, /dev/tap0, where writing to it causes packets to be sent from my Mac, and reading from it yields packets that were received by the virtual interface.
- On its own, and in my manual tests, the tuntap kernel extension works great: you can open() it, write() to it, read() from it, and you’re sending and receiving packets just like you’d hope.
- QEMU has to manage lots of inputs: it uses poll() instead of select() to ask the OS to notify it when a file or pipe has new data to read.
- Therefore, QEMU is trying to react to events on the tap file by passing the tap file to poll().
- macOS’s implementation of poll() just straight up doesn’t support device files:

```python
>>> import select
>>> listener = select.poll()
>>> with open("/dev/tap0", "rb") as f:
...     device_file_descriptor = f.fileno()
...     print(f"File descriptor for the device file: {device_file_descriptor}")
...     listener.register(device_file_descriptor, select.POLLIN)
...     for fd, event_bitmask in listener.poll():
...         if event_bitmask & select.POLLNVAL:
...             print(f"Received event for file descriptor {fd}: POLLNVAL")
...
File descriptor for the device file: 11
Received event for file descriptor 11: POLLNVAL
```

Using a tap device with QEMU on macOS never worked. By my eye, until I ran into this there wasn’t any way to expose a raw network interface with QEMU on a macOS host. We’ve found the bug, just not in the OS I expected.
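To reproduce this kind of discrepancy on any file descriptor, you can ask `select()` and `poll()` the same question side by side. Here's a minimal diagnostic sketch; `readiness` and `describe` are hypothetical helpers. On an ordinary pipe with data, both calls agree. Given the findings above, on a tuntaposx device under macOS you'd expect `select()` to report the descriptor as readable while `poll()` comes back with `POLLNVAL`.

```python
import os
import select


def readiness(fd: int, timeout_s: float = 0.0):
    """Ask select() and poll() the same question: is `fd` readable?"""
    readable, _, _ = select.select([fd], [], [], timeout_s)
    poller = select.poll()
    poller.register(fd, select.POLLIN)
    events = poller.poll(int(timeout_s * 1000))
    revents = events[0][1] if events else 0
    return bool(readable), revents


def describe(revents: int) -> str:
    """Render a poll() revents bitmask as readable flag names."""
    names = [n for n in ("POLLIN", "POLLHUP", "POLLERR", "POLLNVAL")
             if revents & getattr(select, n)]
    return "|".join(names) or "no events"


if __name__ == "__main__":
    read_end, write_end = os.pipe()
    os.write(write_end, b"x")
    says_readable, revents = readiness(read_end)
    print(says_readable, describe(revents))  # an ordinary pipe: True POLLIN
```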

This would be a great place to stop, if we had any restraint. We’ve found the bug, saved the rainforest, rescued the town from the clutches of those malignant ghouls who would do it harm. But we’re here to build axle’s network stack, and by golly we’re going to! We need some way to set up a network interface, and using the tap device is not an option. Where do we go from here?

Someone on IRC name-dropped vmnet. Let’s take a look.

The vmnet framework is an API for virtual machines to read and write packets.

Wow, no beating around the bush with this one! I’m blushing.

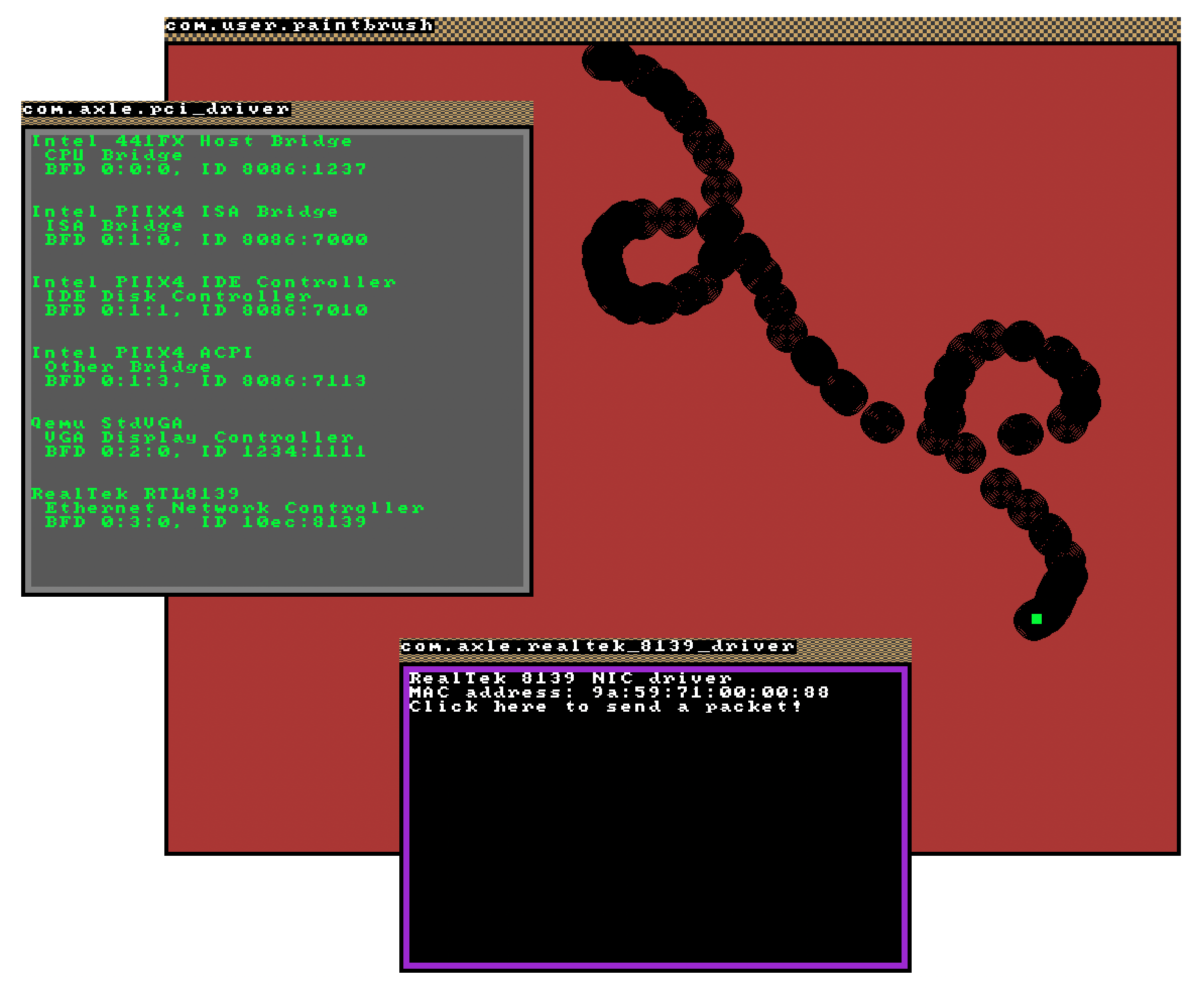

The Great Baton of Destiny in the Sky has deigned that I be the one to add support for vmnet to QEMU, and add support I shall. I introduced a new network backend, a separate option from the User and Tap architectures mentioned above, that relies on vmnet for packet transmission and delivery, and injects the packets into QEMU’s network stack. I fired up axle within my custom QEMU build, gave my RTL8139 driver another try, and, hey presto, I could finally receive packets.
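As I understand Apple's API, vmnet doesn't hand you a file descriptor at all. Instead, you register a callback with `vmnet_interface_set_event_callback()`, which fires on a dispatch queue when packets are available, and you then pull them out with `vmnet_read()`. Getting those packets into a `poll()`-driven main loop therefore needs some kind of bridge. One classic pattern is the self-pipe trick, sketched below in Python. It illustrates the pattern and is not necessarily what the patch does. `ForeignThreadBridge` is a hypothetical name. Conveniently, a pipe is exactly the kind of descriptor that macOS's `poll()` does support.

```python
import os
import queue
import select
import threading


class ForeignThreadBridge:
    """Self-pipe trick: let a callback running on another thread wake a
    poll()-driven main loop. Hypothetical helper, for illustration."""

    def __init__(self):
        self.packets = queue.Queue()  # thread-safe handoff of packet bytes
        self.wake_r, self.wake_w = os.pipe()

    def on_packets_available(self, packet: bytes) -> None:
        # Would run on the foreign thread (e.g. inside a vmnet event callback).
        self.packets.put(packet)
        os.write(self.wake_w, b"\x00")  # makes wake_r readable for poll()

    def drain(self) -> list:
        # Call only after poll() reports wake_r readable; otherwise read() blocks.
        os.read(self.wake_r, 4096)  # consume the wake-up byte(s)
        delivered = []
        while True:
            try:
                delivered.append(self.packets.get_nowait())
            except queue.Empty:
                return delivered


if __name__ == "__main__":
    bridge = ForeignThreadBridge()
    poller = select.poll()
    poller.register(bridge.wake_r, select.POLLIN)
    threading.Thread(target=bridge.on_packets_available, args=(b"frame",)).start()
    if poller.poll(1000):
        print(bridge.drain())  # [b'frame']
```

Because each packet is queued before its wake-up byte is written, a packet can never be stranded: at worst, the loop wakes once more and finds the queue empty.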

With my patch, QEMU can now be started with the following invocation to instruct it to use the vmnet-based network backend:

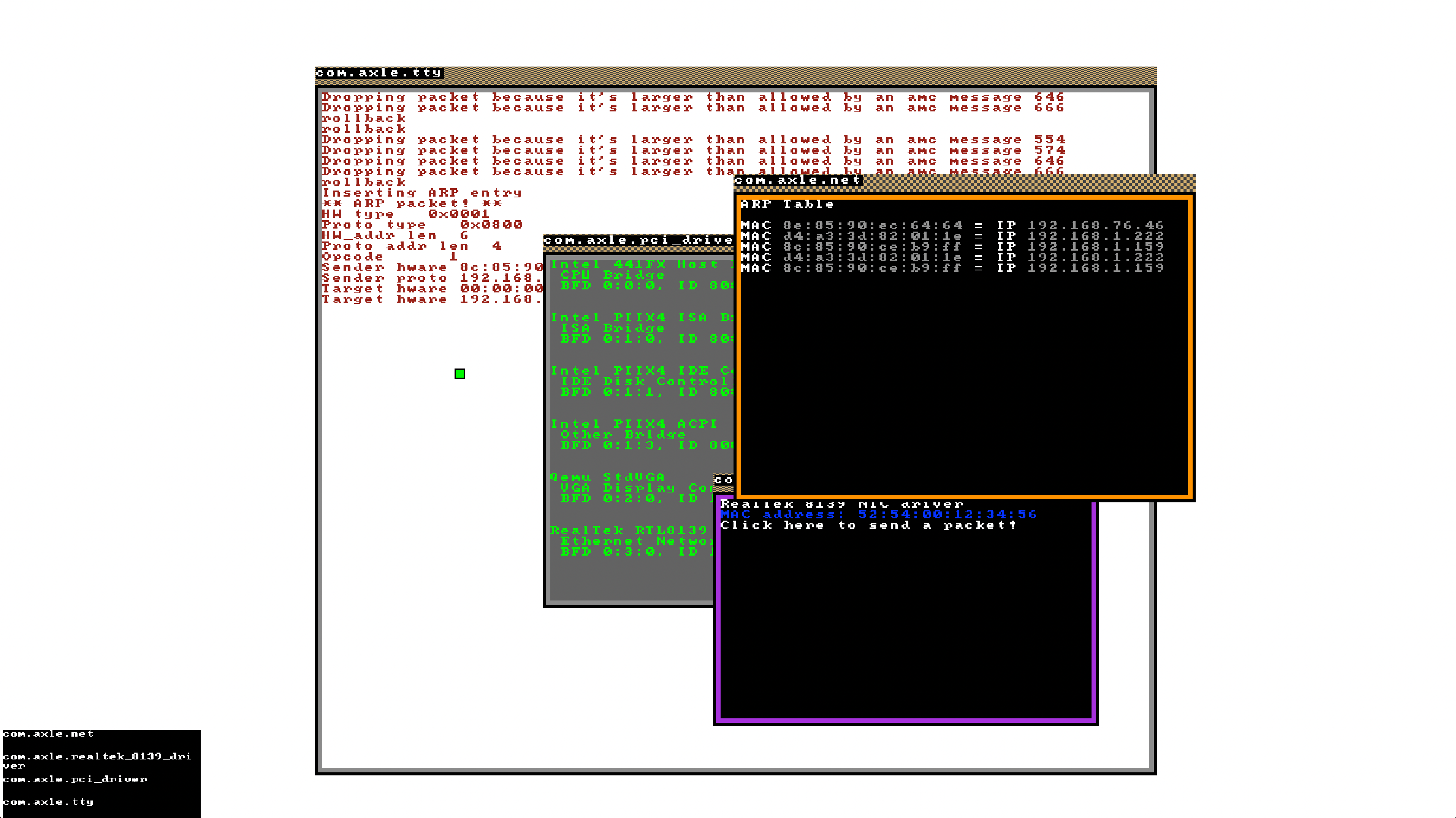

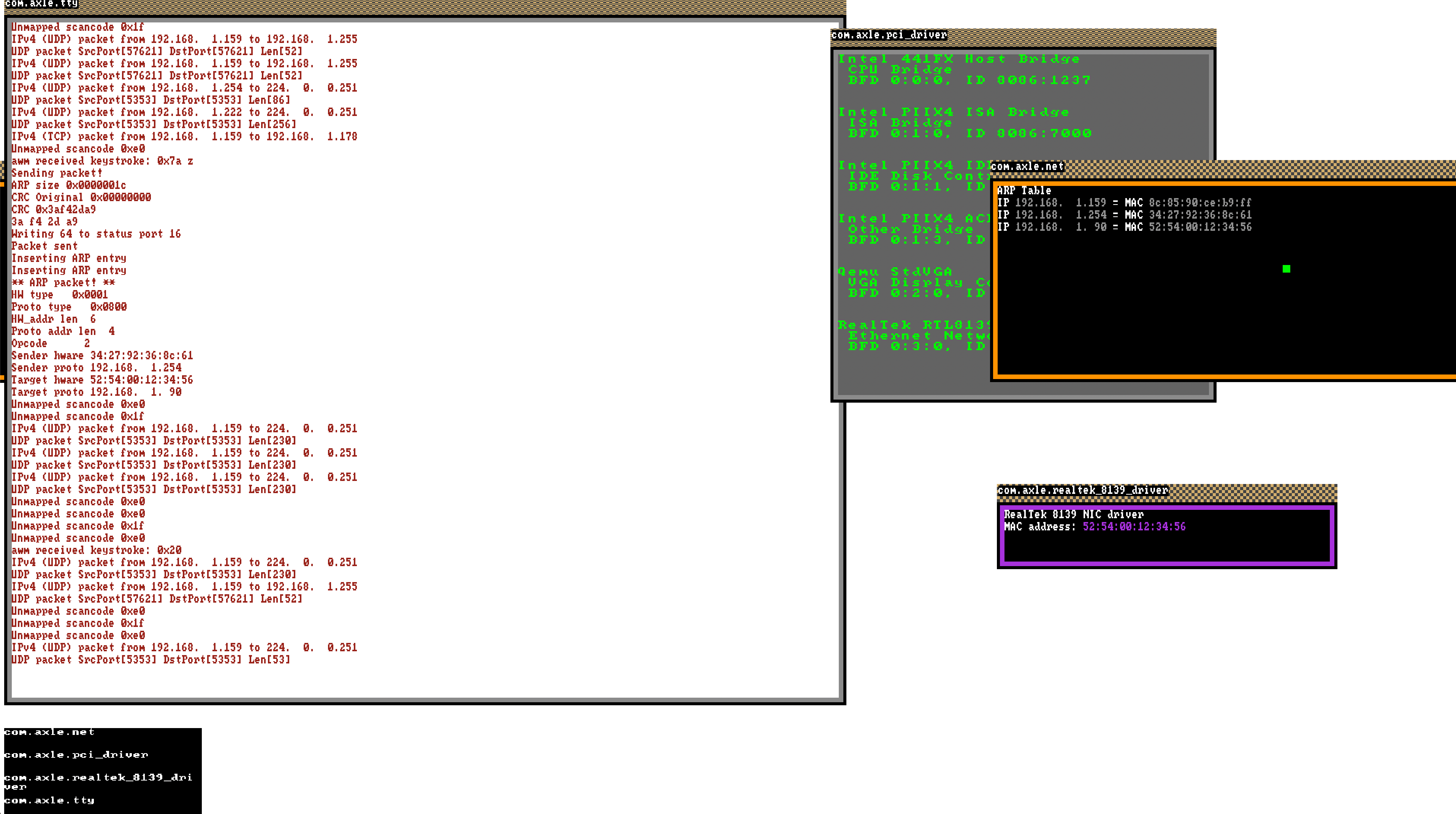

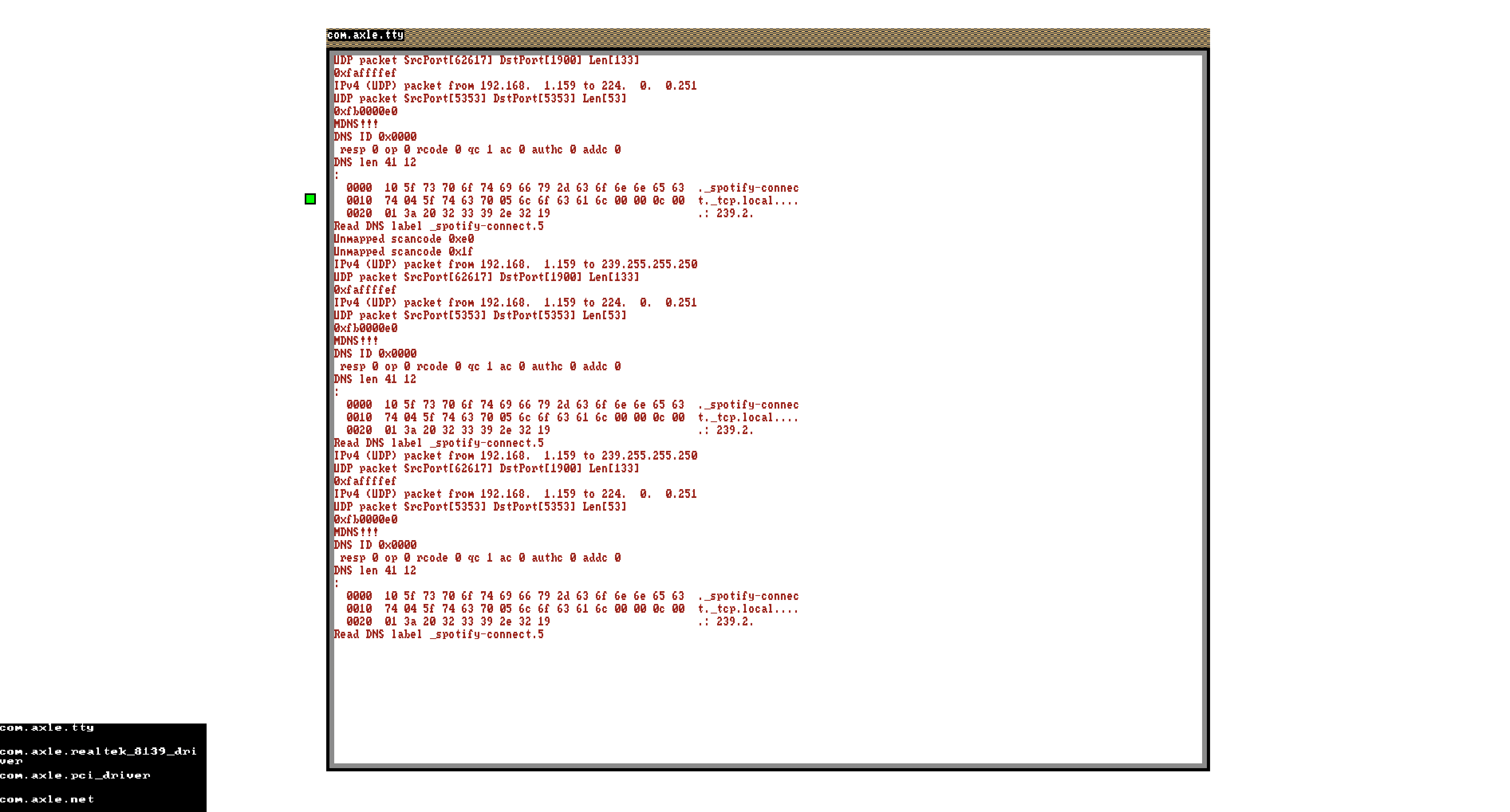

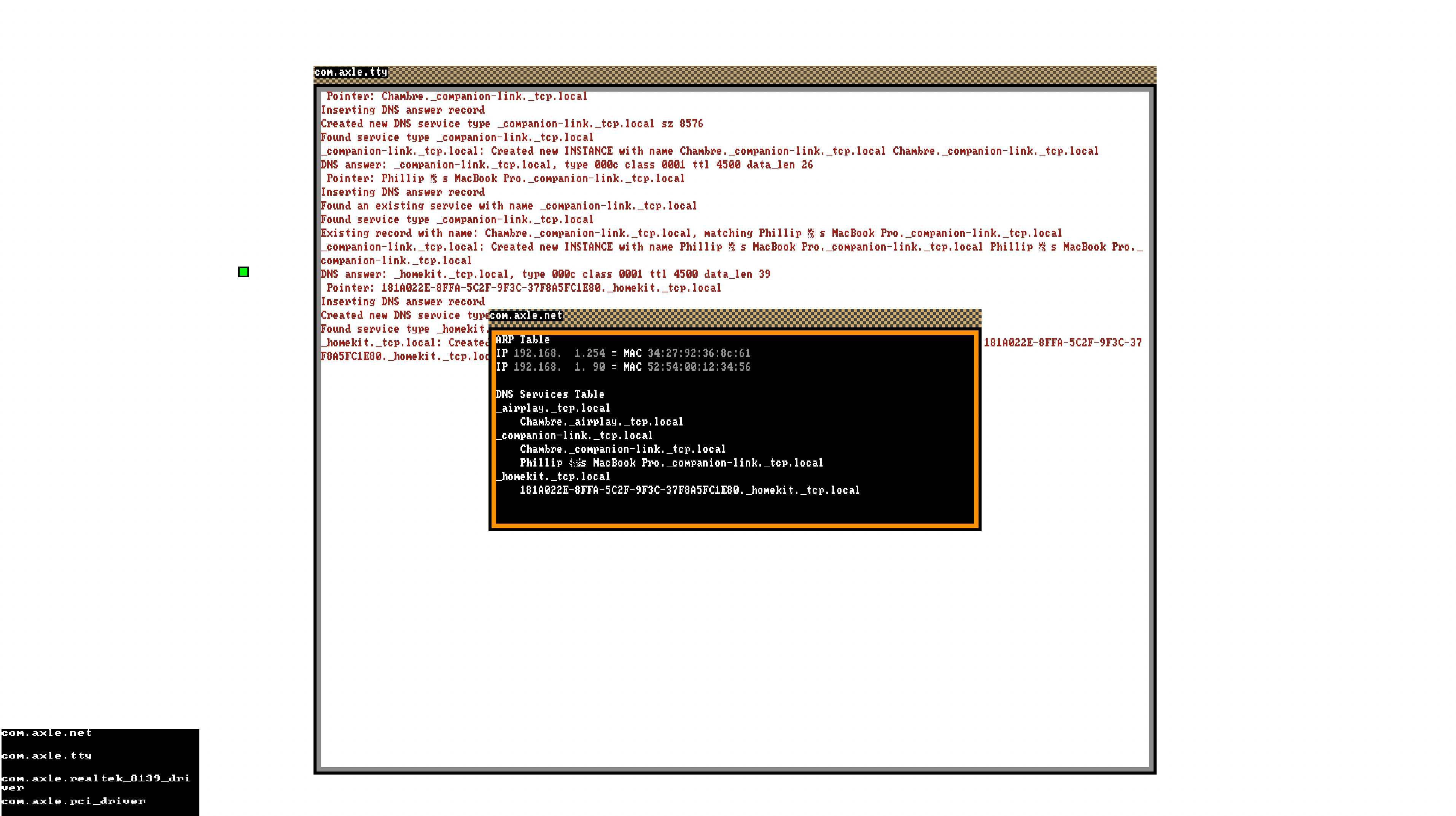

qemu-system-x86_64 -netdev vmnet-macos,id=vmnet -device rtl8139,netdev=vmnetAnd we’re off! What follows are a few screenshots from the frenzied period of development after I got packet reception working, as I gleefully used this hard-earned toy to implement a full TCP/IPv4 stack, including various other protocols such as ARP, DNS, and UDP. I was clearly doing lots of UI development at the time too. It’s wild, in hindsight, to see axle’s interface change so much in these short months!
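For a taste of what such a stack involves, one of the first building blocks an IPv4 implementation needs is the header checksum: the 16-bit one's complement of the one's-complement sum of the header's 16-bit words (RFC 1071). Here's a sketch in Python, checked against a well-known example header whose checksum field is zeroed before computing. `internet_checksum` is a hypothetical name, not axle's code.

```python
def internet_checksum(data: bytes) -> int:
    """RFC 1071 one's-complement checksum, as used in the IPv4 header."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # big-endian 16-bit words
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back in
    return ~total & 0xFFFF


# Example IPv4 header (20 bytes) with its checksum field (bytes 10-11) zeroed.
header = bytes.fromhex("450000730000400040110000c0a80001c0a800c7")
assert internet_checksum(header) == 0xB861
```

Verifying a received header uses the same routine: summing the header with its checksum field filled in yields 0. The same checksum also covers ICMP, and, with a pseudo-header prepended, UDP and TCP.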

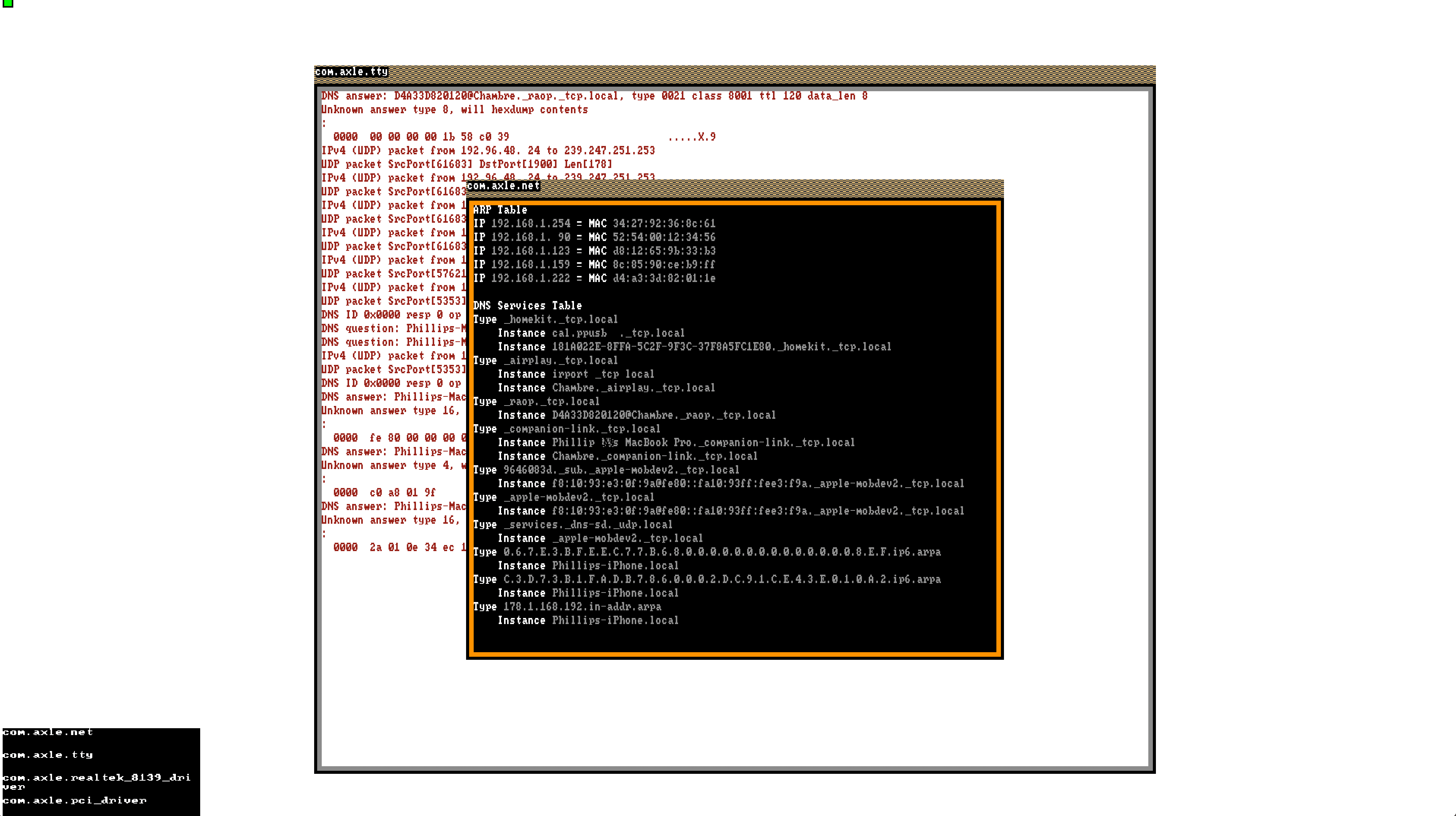

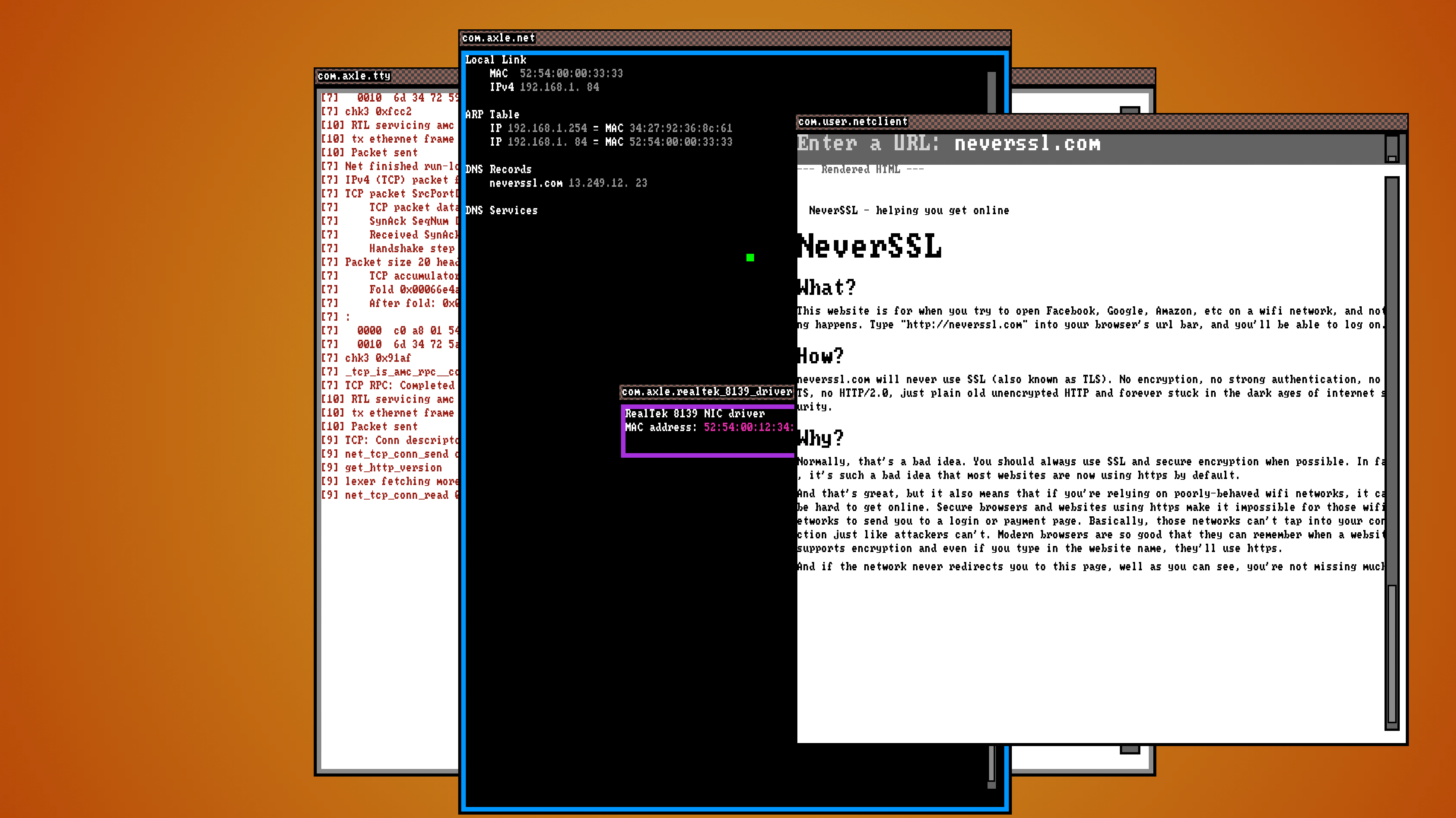

Feb 2021, GUI frontend for the RTL8139 driver

Feb 2021, visualizing ARP tables

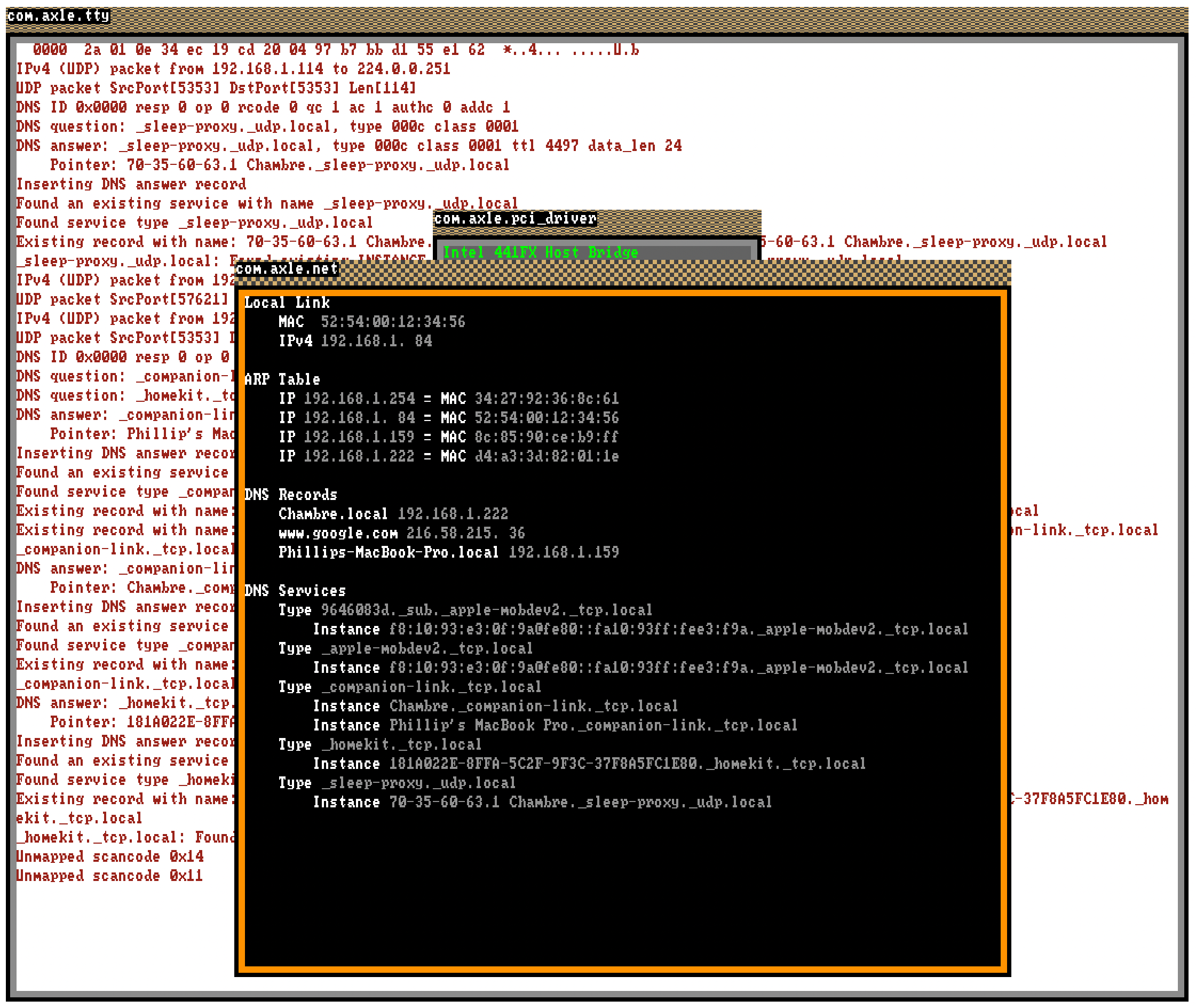

Feb 2021, ARP tables with better font rendering

Feb 2021, dumping DNS packets

Feb 2021, visualizing mDNS services

Feb 2021, mDNS services with a facelift

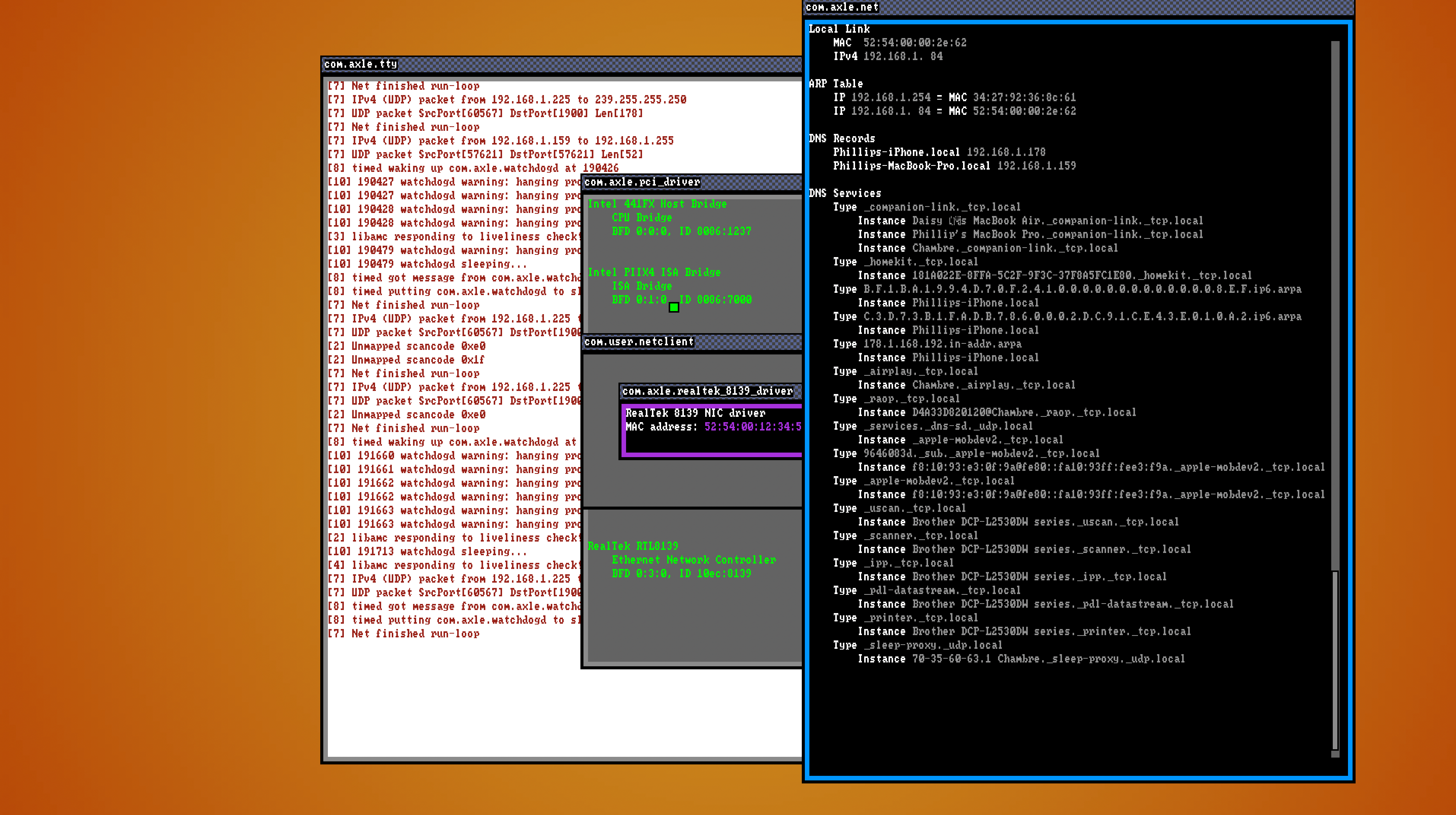

Feb 2021, remote DNS lookup

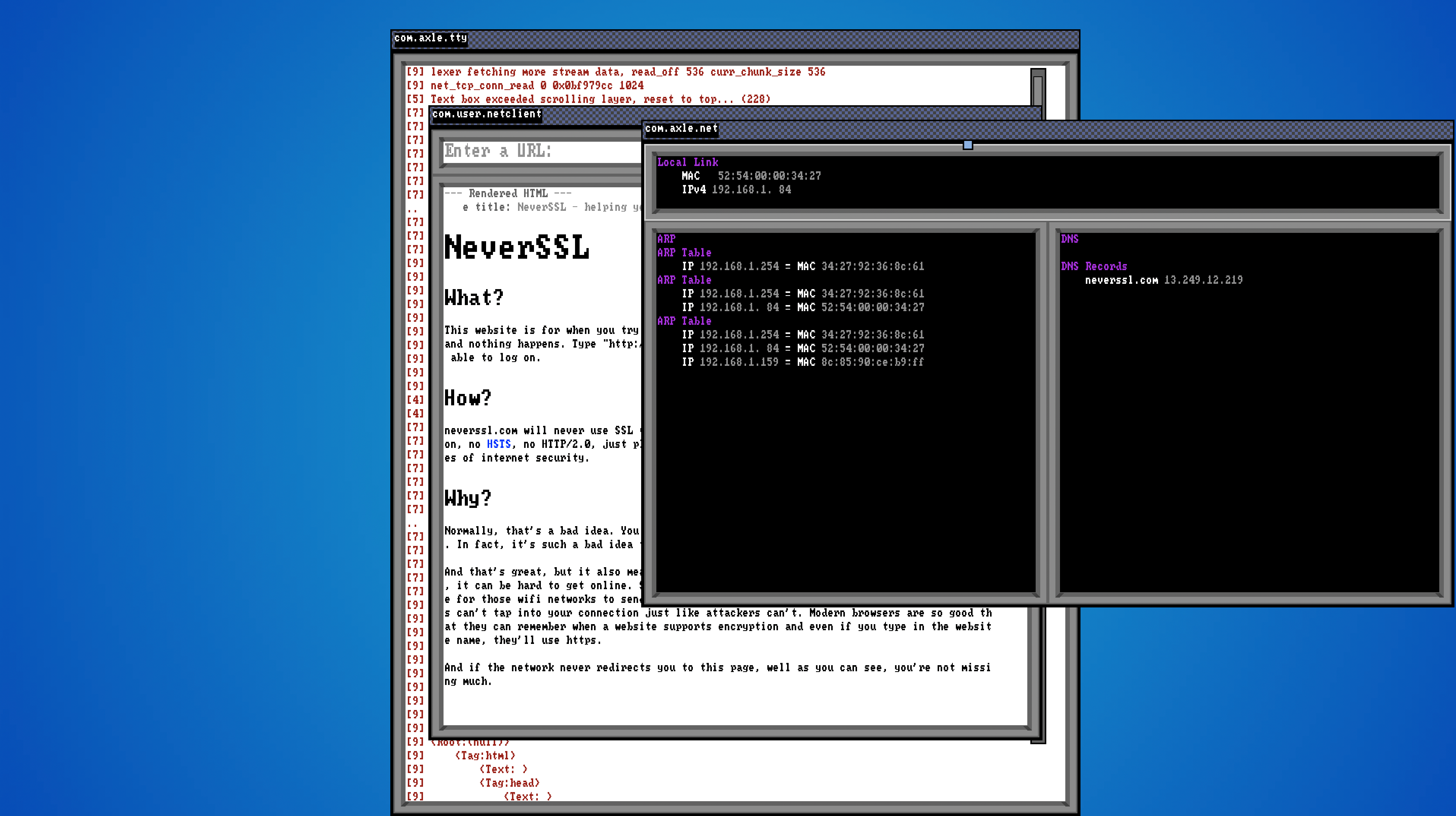

March 2021, UI revamp

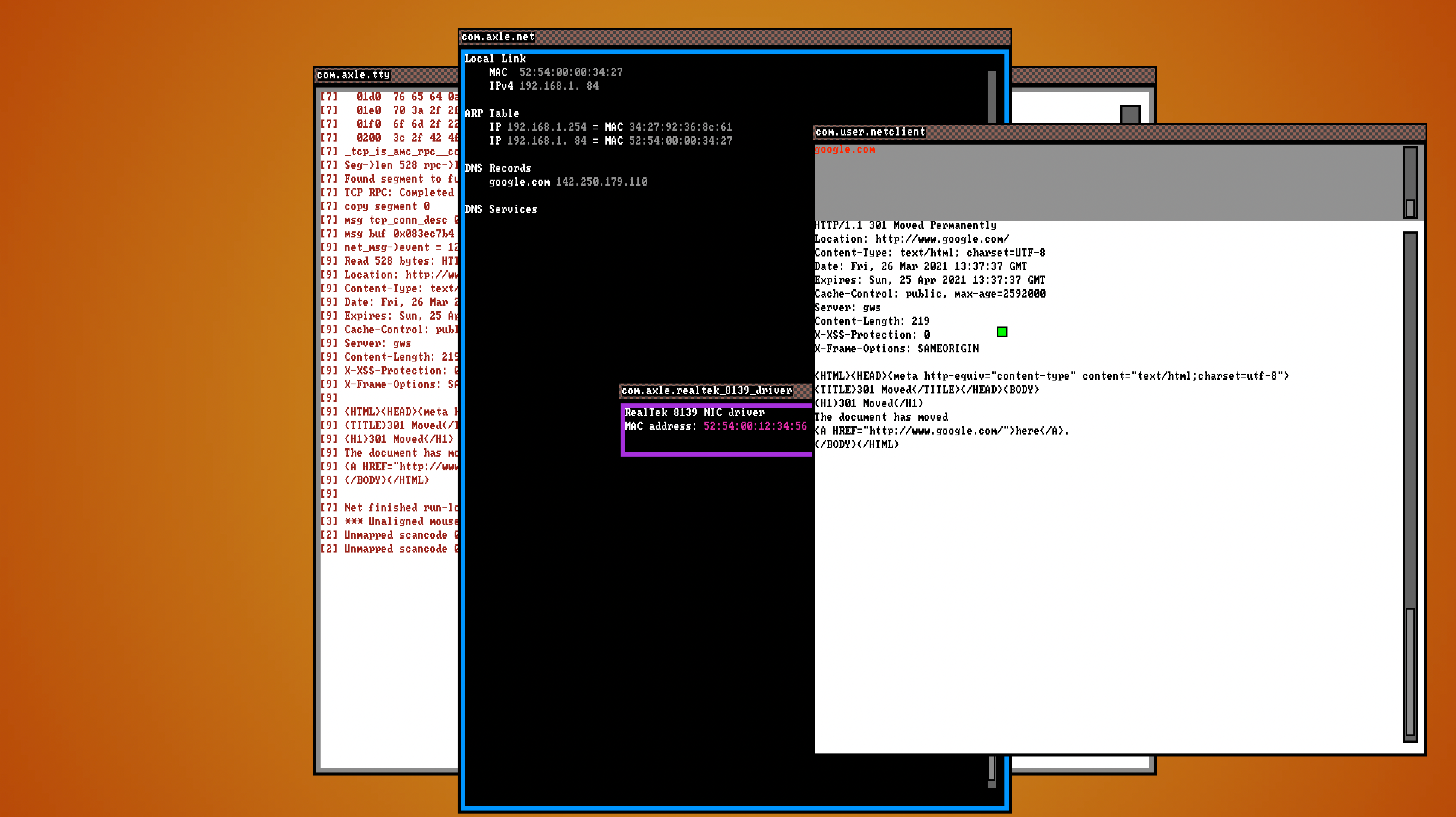

March 2021, fetching remote HTTP via user input

March 2021, rendering neverssl.com

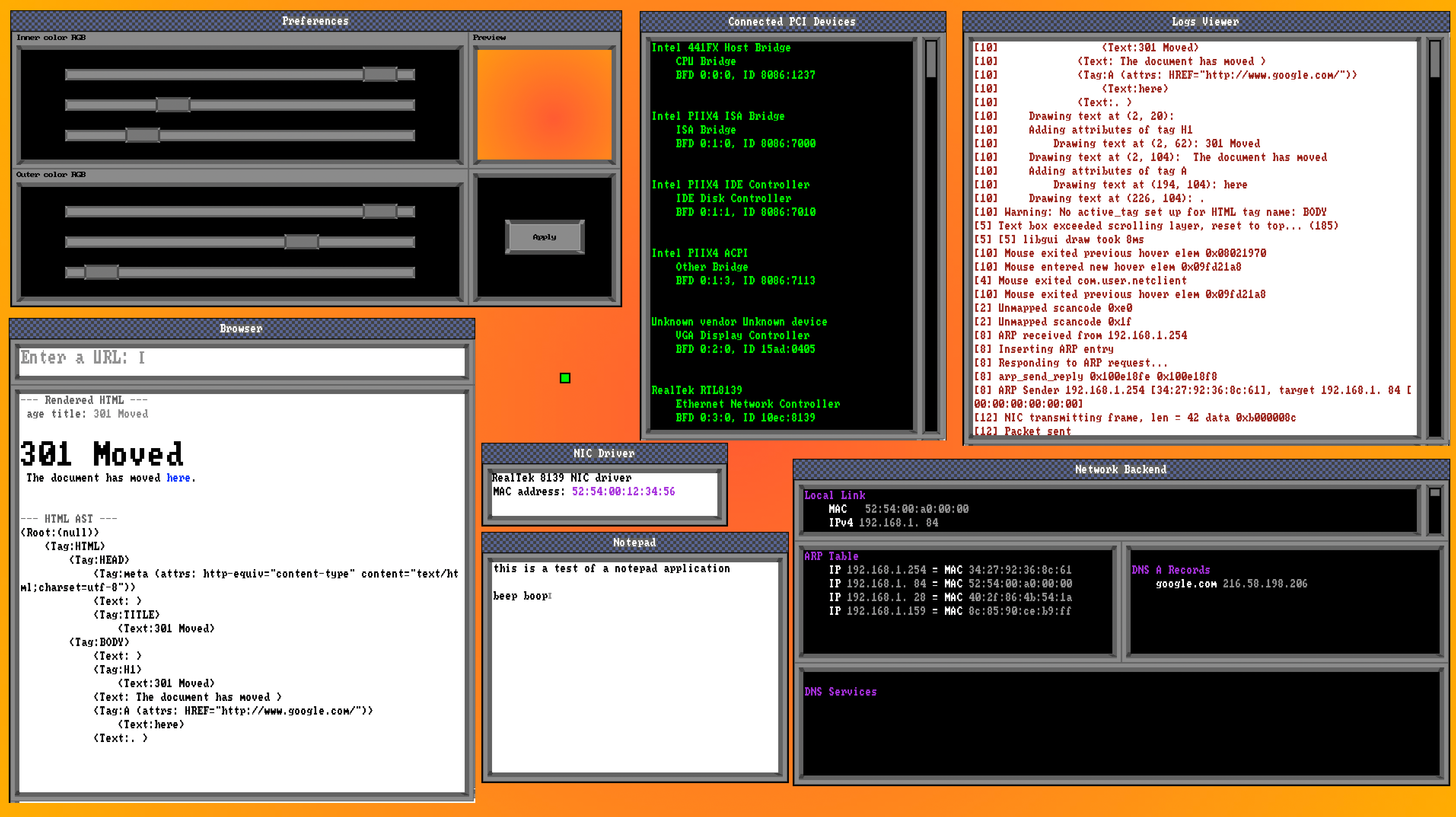

April 2021, redesigned network app and shared UI toolkit

April 2021, UI toolkit and network demo